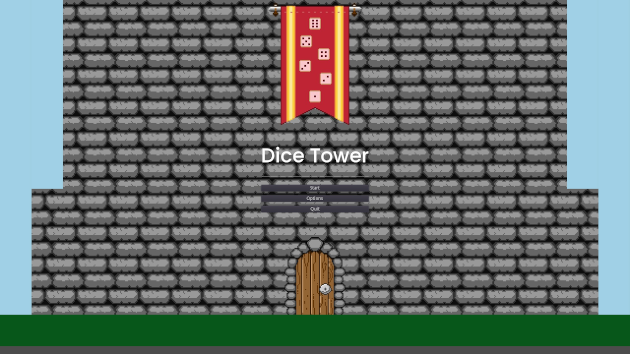

This July, Rebecca and I took part in Game Maker’s Toolkit Game Jam 2022 (an annual game jam hosted by the creator of YouTube channel Game Maker’s Toolkit, Mark Brown), making a game called Dice Tower in only fifty hours. It was our first game jam in a long time; we had spent the last three years focusing (and failing) on making our “first big release”. Those fifty hours proved to be an intense, fulfilling experience, and I want to examine how things went. I’ll start with the goals that Rebecca and I made, continue into what happened during the jam, and conclude with whether I felt our goals were met and what we’ll do in the future.

Our Goals

First and foremost, Rebecca and I wanted to actually release the game! Our last jam effort, Rabbit Trails, never saw the light of day because we didn’t get the build submitted in time, and we were determined to avoid making that mistake again.

I wrote briefly about this experience as part of my retrospective on 2019.

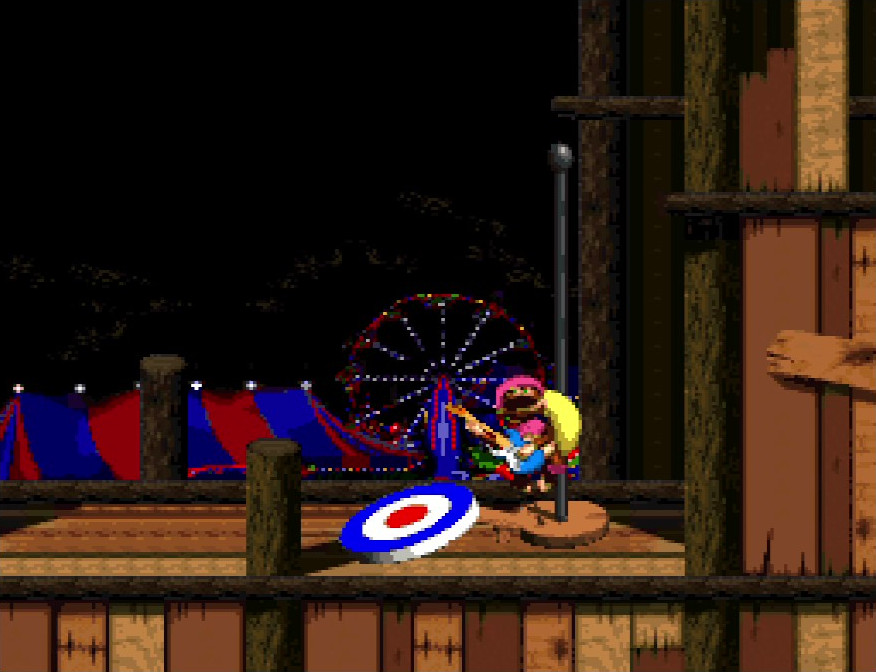

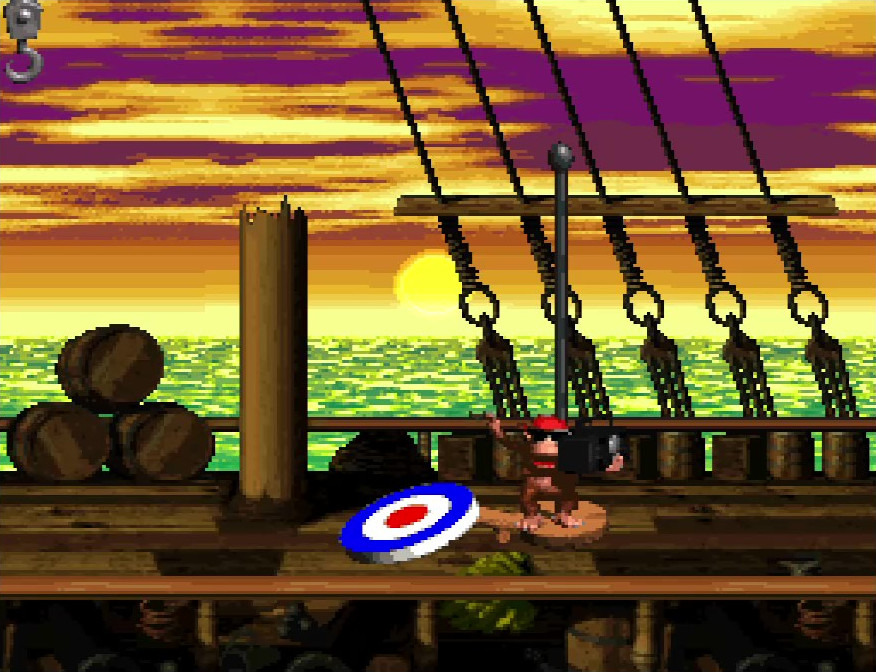

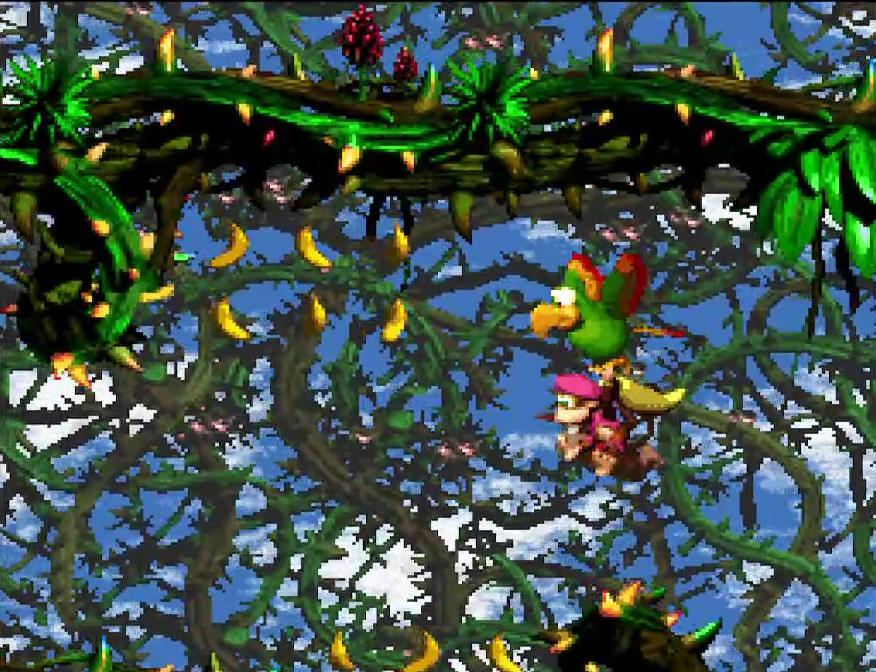

To that end, we explicitly wanted to scope our game small enough that it had a realistic chance of being completed by jam’s end. Further supporting that goal, we also decided that we would avoid trying to come up with something “clever”; we would be fine with coming up with feasible and fun ideas, even if they might be ideas that other people were likely to come up with. Finally, we determined that we would specifically make a 2D side-scrolling platformer, because that was the genre we were most familiar with; we didn’t want to waste time figuring out how to do a genre that we lacked experience in making.

Based on what had happened in previous jams, we had a few other goals we wanted to meet. Given that our previous games felt bland, we wanted to make sure whatever we made felt more polished and juicy, thereby increasing how good it felt to play. Previous jams had left us feeling burnt out and exhausted, and, while that is a traditional hallmark of game jams, we strongly wanted to avoid feeling that way at this jam’s end, so we made plans to limit how many hours we’d work per day so that we’d have time to relax and get our normal amount of sleep.

Separate from the in-jam goals, we had one ulterior objective: we wanted to see if the idea we came up with was good enough to develop further into a small release. The game we’d been working on, code-named “Squirrel Project”, was still very much in the early prototyping stage; we’d built and tried a lot of things, but made little progress in actually putting together a full game experience. Both of us felt concerned with how much time we were taking in making a “small game”, so we were interested in seeing if doing game jams might give us smaller concepts that would be easier to develop and release quickly. This jam would serve as a proof of concept for this theory.

Day One (Friday, 7/15/22)

The day of the jam arrived. Our son was off spending the weekend with relatives, we’d prepared our meal plan for the next couple of days, and I’d taken time off from my day job. We sat around my laptop, awaiting Mark Brown’s announcement of the theme. At noon, the video was released, and we saw the theme emblazoned on the screen:

Initial Planning

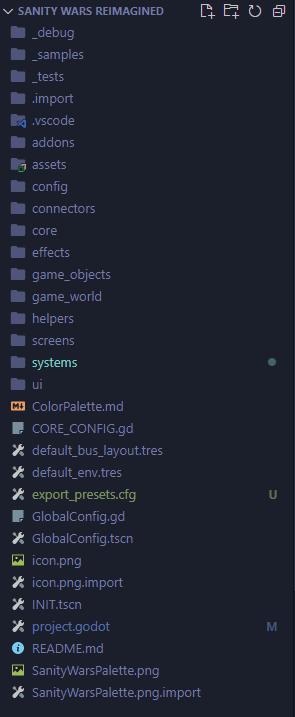

I hated the theme at first impression. One of my takeaways from working on Sanity Wars Reimagined (our first-ever full release) was that working with randomness in game design was hard, and now we had a game theme which strongly implied designing a game focusing around randomness. In my mind, it would be harder to design a game around dice that wasn’t random in some way. Concerned now with whether we would have enough time to make a good game, I started brainstorming ideas with Rebecca.

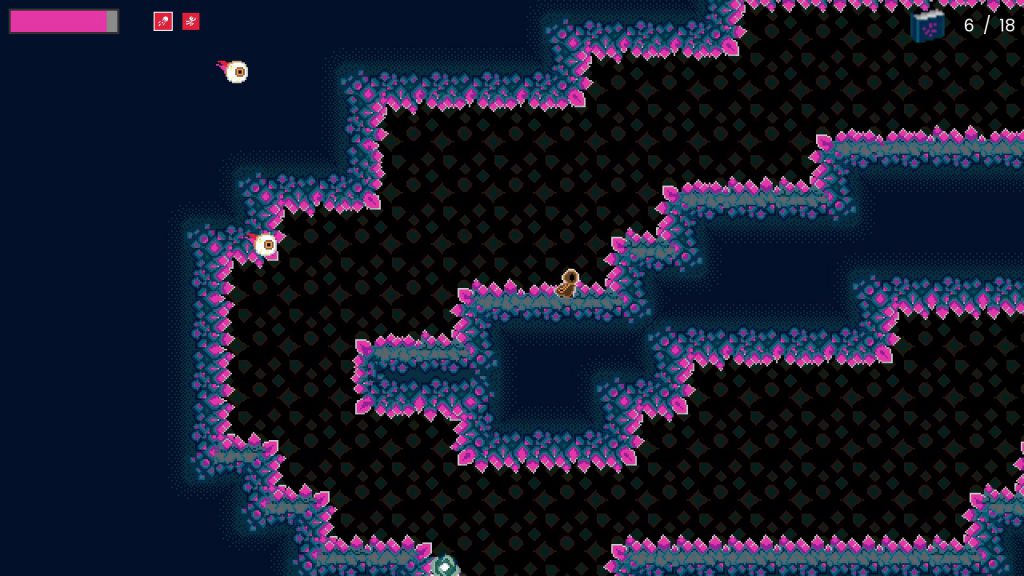

We spent that first hour working out an idea; the first idea we hit upon wound up dominating the rest of our brainstorming session, to the point that we didn’t seriously entertain anything else. The game would be a rogue-lite platformer, where you moved to various stations and rolled dice to determine which ability you got to use for the next section of gameplay. Throughout the level would be enemies that you could defeat to collect more dice, which would then give you a better chance to roll higher at the ability checkpoints, thereby increasing your odds of getting the “good” abilities.

I intentionally wanted to minimize the randomness of our game so that players felt they had some level of control over the outcomes of dice rolls, and I liked the idea of using dice quantity to achieve this. The more dice you add to a roll, the higher the total tends to be, and the more the possible totals cluster around the middle of the range rather than at the extremes. That spread of likelihoods across the totals is the roll’s probability distribution.
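To make that concrete, here is a minimal Python sketch (not code from the jam, which was built in Godot) that computes the exact chance of each total when rolling a handful of fair six-sided dice. The function name `sum_distribution` and the fair six-sided dice are assumptions made for illustration.

```python
from collections import defaultdict
from fractions import Fraction


def sum_distribution(num_dice, sides=6):
    """Return {total: probability} for the sum of `num_dice` fair dice.

    Hypothetical helper for illustration; assumes fair dice with faces 1..sides.
    """
    dist = {0: Fraction(1)}  # with zero dice, the total is always 0
    for _ in range(num_dice):
        nxt = defaultdict(Fraction)  # missing totals start at probability 0
        for total, prob in dist.items():
            for face in range(1, sides + 1):
                # Each face is equally likely, so split the probability evenly.
                nxt[total + face] += prob * Fraction(1, sides)
        dist = dict(nxt)
    return dist


if __name__ == "__main__":
    for n in (1, 2, 3):
        dist = sum_distribution(n)
        lowest, likeliest = min(dist), max(dist, key=dist.get)
        print(f"{n} dice: P({lowest}) = {dist[lowest]}, "
              f"most likely total {likeliest} with P = {dist[likeliest]}")
```

With one die every total is equally likely, but with two dice a 7 comes up one time in six while a 2 comes up one time in thirty-six. That gap between the middle and the extremes is what makes collecting extra dice feel like gaining control rather than just adding more luck.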

At the end of that hour, we formally determined that this was the idea we wanted to work with. Rebecca started crunching out pixel art, and I got to work making a prototype for our envisioned mechanics.

That Old, Familiar Foe

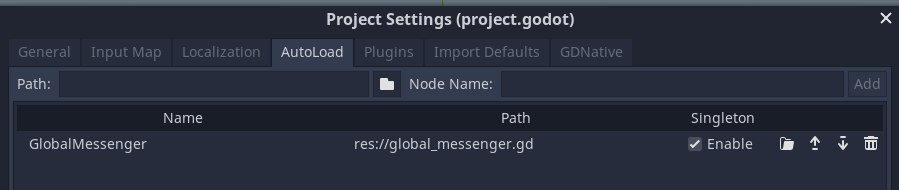

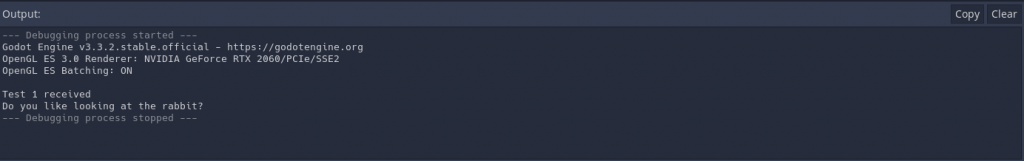

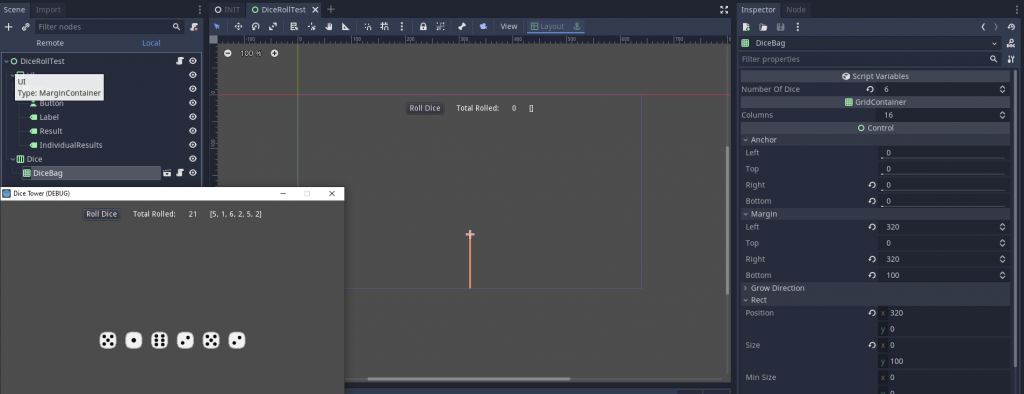

The next few hours of my life were spent working on the various elements that would serve as a foundation for our mechanics. I threw together some simple dice code, along with dice containers that could roll all the dice they were given. I also pilfered my player character and enemy AI code from Squirrel Project, to jumpstart development in those areas.

But something happened as I started trying to work out how I was going to make the player’s abilities work: my brain began to freeze up. This was a frighteningly familiar sensation: I’d felt this way near the end of developing the original Sanity Wars (for Ludum Dare 43). Dark thoughts started clouding my mind:

There’s not enough time to make this game.

You’re going to fail to finish it, just like your last game jam.

Is what you’re feeling proof that you’re not really good enough to be a game developer?

Slowly, I forced myself to work through this debilitating state of mind. After several more hours, I came up with a janky prototype for firing projectiles, and a broken prototype for imparting status effects on the player (like a shield). It occurred to me that if I was having this much trouble making something as simple (cough cough) as player abilities, then how was I going to have time to fix my glitchy enemy AI, and develop the core mechanic of rolling dice to gain one of multiple abilities, and make enough content to make this game feel adequate, let alone good? Oh, and then there was still bugfixing, sound and music creation, playtesting…

It finally hit me: This idea wasn’t going to work. There was simply too much complexity that was not yet done, and I was struggling with the foundational aspects that needed to be built just to try out our idea. There was no way I would be able to finish this vision of our game on time.

My mind in shambles and my body exhausted, I shared my feelings and concerns with Rebecca, and she agreed that we needed to pivot to a new idea. We tried to come up with something, but we were both too tired to think clearly. Therefore, we decided to be done for the evening, get some supper, and head to bed.

Note the lack of planned relaxation time.

As I lay in bed, I fretted about whether we could actually come up with a new idea. That first idea was by far the best of what few ideas we’d been able to come up with during our brainstorming session; how were we going to suddenly come up with an idea that was good enough and simpler? With these worries exhausting my mind, I fell asleep.

Day Two (Saturday, 7/16/22)

The next morning, at 6am, I woke up, took a shower, and played a video game briefly. This was my normal morning routine during the workday, and I was determined to keep to it. At 7am, I grabbed a cup of coffee and sprawled out on the couch, pad of paper and pen resting upon an adjacent TV tray, prepared to try and come up with a new idea.

Rebecca joined me at 7:30am, after she did her own waking routines.

The New Idea

I wrote down the elements we already had: Rebecca’s character art and tileset from her previous day’s work, some dice and dice containers, and a player entity that was decently functional as a platformer character. If our new idea could incorporate those elements, then at least not all of yesterday’s work would go to waste.

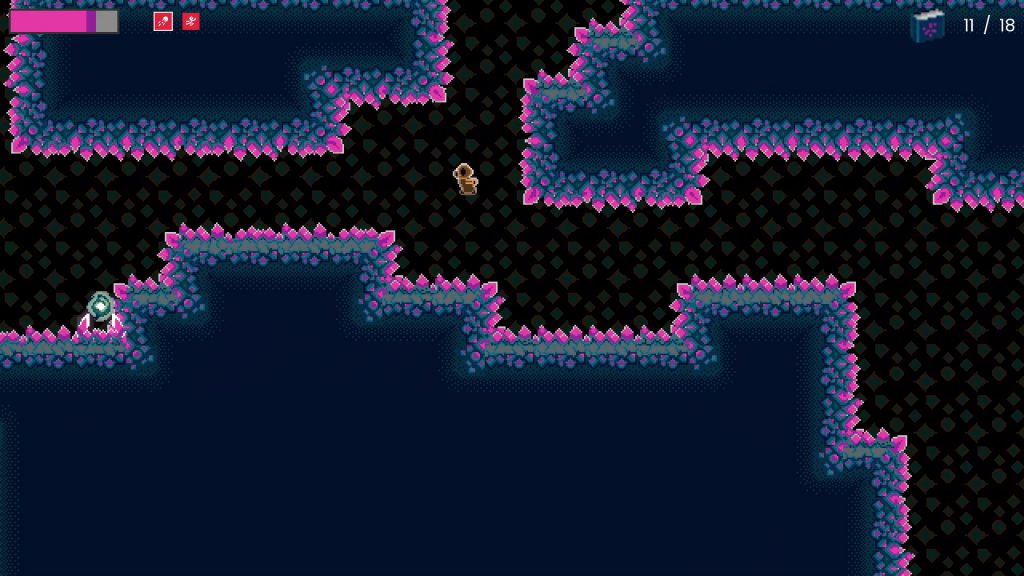

After thinking about it for a long while, I hit upon an idea: what if you rolled dice to determine how much time you had to complete a level? As you moved through each level, you could collect dice and spend them at the end-of-level checkpoints to increase your odds of getting more time to complete the next level. If that were combined with a scoring system involving finding treasure collectibles that were also scattered throughout each level, then there’d be potentially interesting gameplay around gambling how many dice you’d need to collect to guarantee that you had enough time in each level to collect enough treasure to get a high score.

I pitched the idea to Rebecca, and after some discussion about that and a few other ideas, we decided this was the simplest idea, and therefore had the best chance of being completed before the deadline. Fortunately, this idea also did successfully incorporate most of the elements that we’d worked on yesterday, so we didn’t have to waste time recreating assets. On the other hand, this idea needed to work; there was likely not going to be any time to come up with another plan if we spent time on this one and it failed.

Our idea and stakes in mind, Rebecca and I once more commenced our work.

Slogging Through the Day

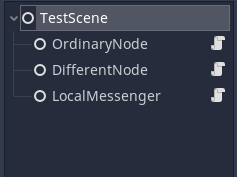

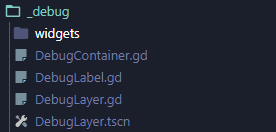

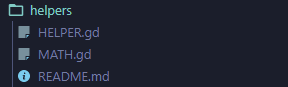

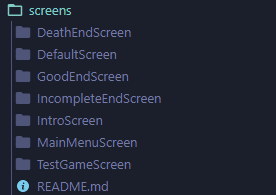

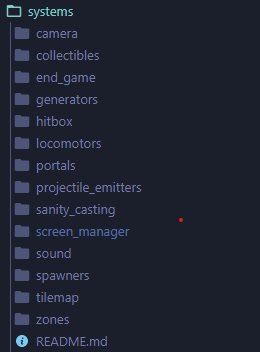

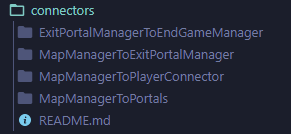

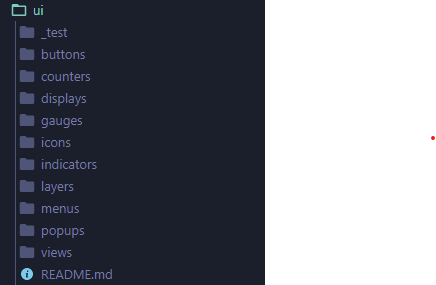

I created various test scenes in Godot. Slowly, I began to amass the individual systems and entities that I’d eventually put together to form the gameplay. Throughout the day, I felt very sluggish and lethargic mentally; this was likely a side effect of the burnout I’d put myself through yesterday. I kept reminding myself that some amount of progress was better than none at all, but it still didn’t feel great.

By the start of that evening, I had a bunch of systems, but nothing that fully integrated them. Taking a break, I went outside for a walk and some breaths of fresh air. As I trod the trails in our neighborhood, I worked out the game’s next steps. I reasoned that if I continued to put each system and entity together in isolation and test them, I stood a realistic chance of running out of time to put everything together into a cohesive game. Therefore, I decided to forgo this approach, and simply put everything together now, and build the remaining systems as I went along. This flew in the face of how I traditionally prefer to program things, but I resolved to set aside my clean code concerns and focus simply on getting the game working.

The Late Evening Dash

Not long after I returned from my walk, it became 7pm. This was the twelve-hour mark, and in our pre-jam plans I’d determined that I wouldn’t work more than twelve hours on Saturday. Yet I still didn’t have much that was actually put together. I was now faced with a conundrum: should I commit to stopping work now, and risk not having enough time tomorrow to finish the minimum viable product?

Ultimately, I decided that I would try to get an MVP done tonight, or at least keep working until I felt ready to stop. Three hours later, while that MVP was still incomplete, I had put together the vast majority of what was needed to make the game playable: a level loading system, the dice-rolling checkpoints, dice collection (though said dice weren’t yet connected to the checkpoints), the level timer and having it set from rolling the checkpoint dice, rudimentary menu UI, and player death. At this point, I felt that continuing to work would just cut into my sleeping time, and I was still determined to get a proper amount of sleep. At the least, those few hours had produced enough progress that I felt confident that I could finish things up tomorrow morning.

I quickly prepared for bed, and after watching some YouTube to wind down I fell asleep.

Day Three (Sunday, 7/17/22)

That morning, I woke up at 6am, as usual, but this time I skipped all of my morning routine save the shower. By 6:30am, I was at my computer and raring to go.

Blazing Speed and Fury

The first thing I did was export the game as it currently was. I’d been burned enough times by last-minute exports that I was determined to make sure that didn’t bite me in the butt this time. Fortunately, this time there were no export-specific crashes or bugs, so I resumed work on the game proper.

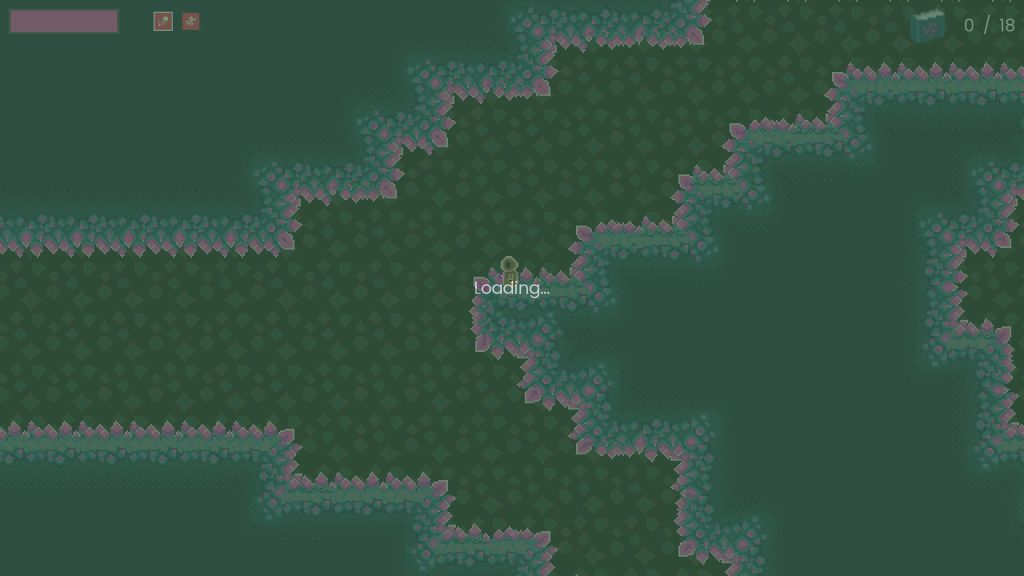

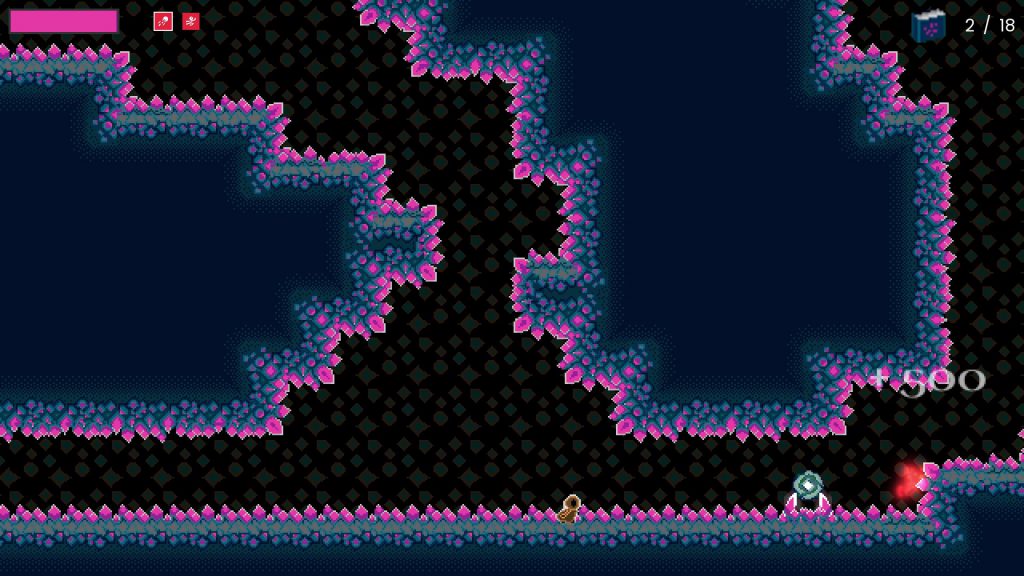

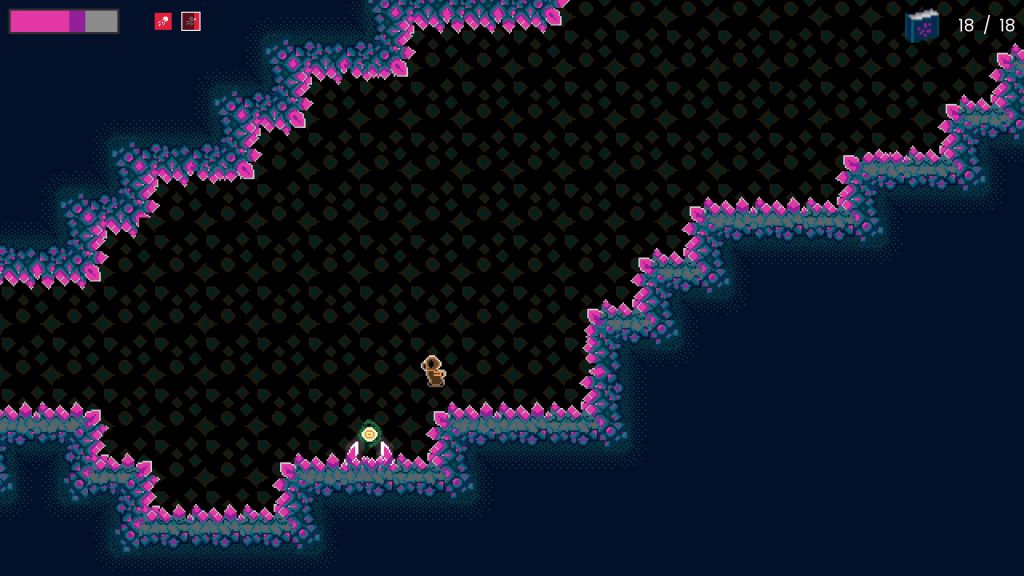

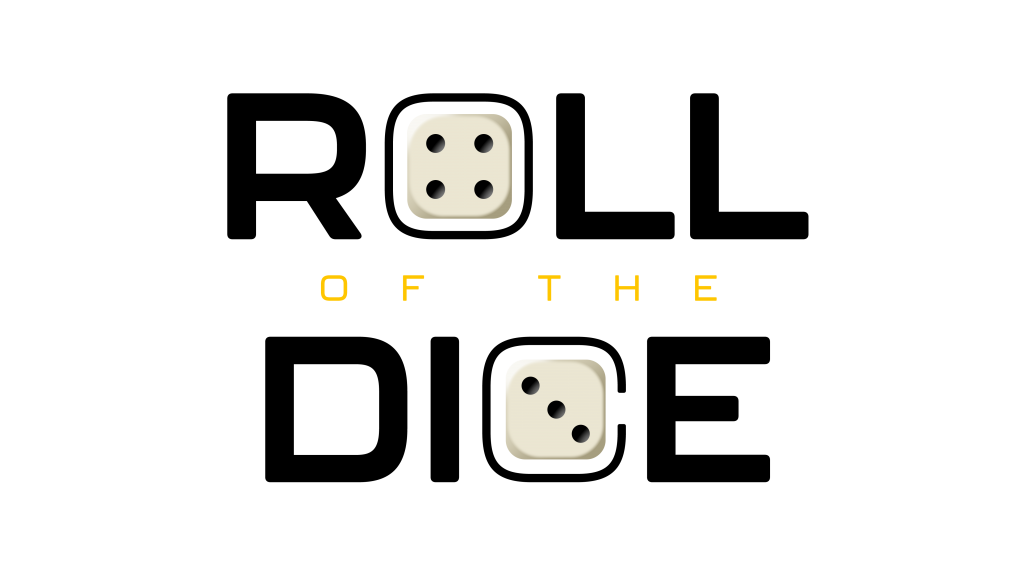

For the next several hours, my mind singularly focused on getting this game done. I finally made the checkpoints accept the dice you collected, thus completing the core loop of rolling dice to determine the time you had to make it through the level. I added game restart logic, added transition logic for when the player was moving between levels, added endgame conditions and win/loss logic, and fixed various bugs encountered along the way. I also realized that I wouldn’t have time to implement a scoring system, so I unceremoniously cut it from the MVP features.

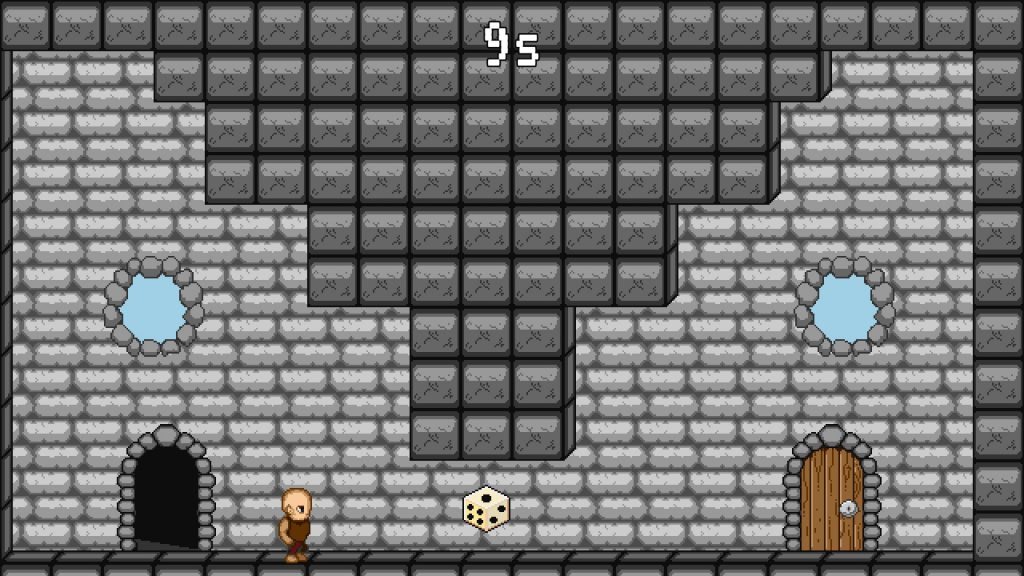

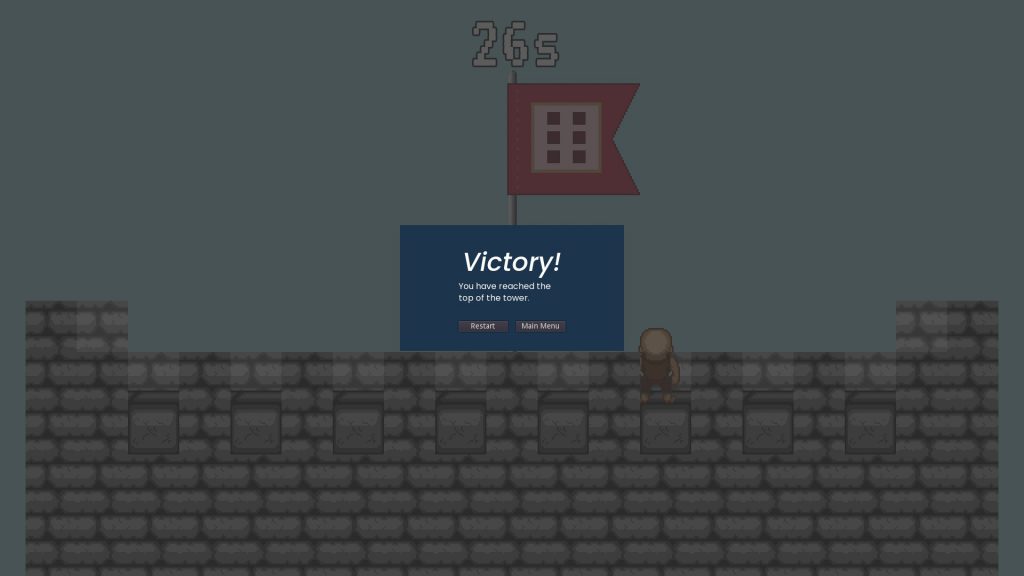

Around noon, I finally had the game fully working. I could boot the application, start a new game from the main menu, play through all the designated levels, and successfully reach the victory level to win the game (or run out of time and lose). If nothing else, we at least had a playable game!

Final Push

The game jam started at noon on Friday, and as it was now noon on Sunday that meant 48 hours had passed. Thankfully, the jam had a two-hour grace period for uploading and submitting games to Itch.io. That meant I had less than two hours to jam as much content and polish into the game as I could before release!

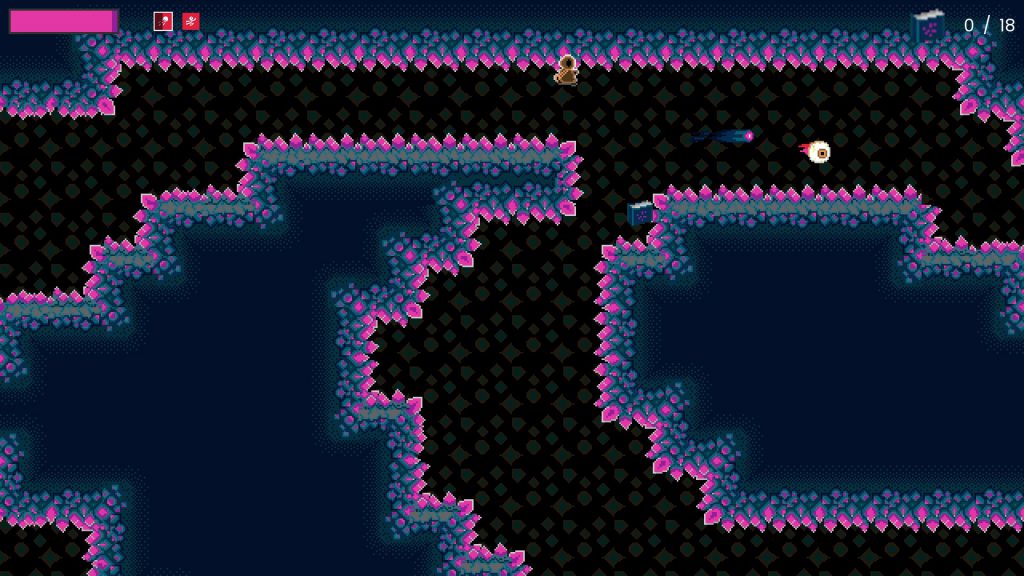

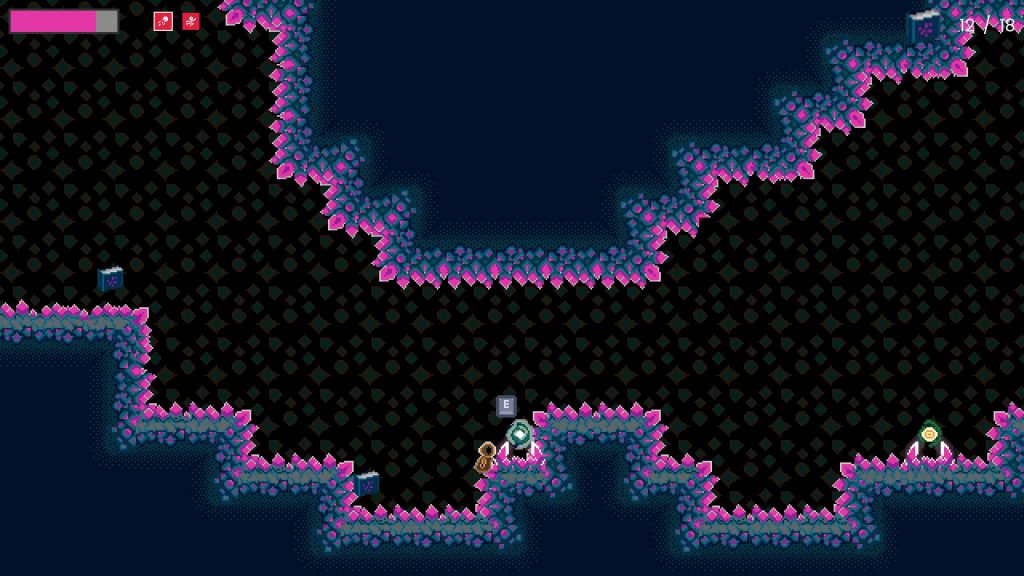

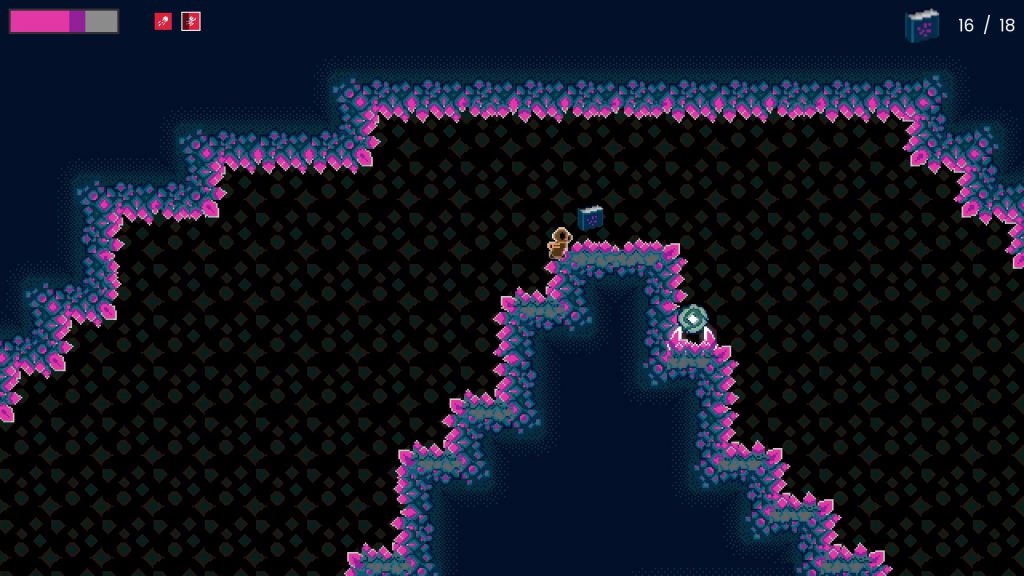

I blazed my way through creating six levels, spending less than an hour to do so. Of course, that meant I had little time to balance the levels properly, beyond ensuring they could be completed in an average amount of time. One thing I did spend time on was adding the various pieces of decorative art Rebecca made to each level. It might seem frivolous to add decorations, but I think having a nicely-decorated level goes a long way towards breaking up level monotony and sameness, and honestly it didn’t take that long for me to add those things.

After a few trials, I hit upon a solution for balancing the randomness of the dice checkpoints: I’d give the player six free seconds at the start of each level, and a single die at each checkpoint (in addition to the ones collected through gameplay). As I playtested the levels, that felt long enough that, given the probability curves, the average player would have a decent chance of finishing each level.
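As a rough illustration of that balancing question, the Python sketch below estimates how likely a player is to get at least a given amount of time for a level. It is not the jam code. The six free seconds and the one bonus die come from the setup described above, but the rule that each pip rolled is worth one second is purely an assumption for this example, as is the helper name `chance_of_at_least`.

```python
from fractions import Fraction
from itertools import product

FREE_SECONDS = 6  # free time granted at the start of each level
BONUS_DICE = 1    # the single die handed out at each checkpoint


def chance_of_at_least(target_seconds, collected_dice, sides=6):
    """Probability that the level timer is at least `target_seconds`.

    Assumption for illustration: every pip rolled adds one second.
    Enumerates every possible roll, so keep the dice count small.
    """
    num_dice = collected_dice + BONUS_DICE
    hits = 0
    for roll in product(range(1, sides + 1), repeat=num_dice):
        if FREE_SECONDS + sum(roll) >= target_seconds:
            hits += 1
    return Fraction(hits, sides ** num_dice)


if __name__ == "__main__":
    # How the odds of a 13-second timer change with dice collected.
    for collected in range(4):
        print(collected, "collected:", chance_of_at_least(13, collected))
```

Under those assumptions, a player who collects no dice can never reach a 13-second timer, while a player who collects one die gets there a little more than half the time. A table like this is one way to sanity-check whether a level's length is fair for a player who collects an average number of dice.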

Intentionally, I chose to not focus at all on adding sound effects and music. My reasoning went thusly: players would probably prefer to have more content to play through than have sound and music with very little content. It made me remorseful, because sound can make an okay game feel great, but there just wasn’t enough time to do a good job of it, so I decided it was better to just cut audio entirely.

Finally, at 1:30pm, I looked at what we had and determined it was good enough. Rebecca had been working on setting up our Itch.io page, and I gave her the final game build export to upload to the store page. We submitted the game just in the nick of time; shortly after our submission was processed, Itch.io crashed under the weight of thousands of last-minute uploads. While this server strain prevented us from adding images for our game page (we got them in later), at least we already had the game uploaded and submitted, so we weren’t in danger of missing the deadline.

Rebecca and I looked at each other. We’d done it! We’d successfully made a game and submitted it! We spent the rest of the afternoon out and about on a date, taking advantage of our last bits of free time before returning to parenthood the next day.

Feedback

Over the next week, our game was played and rated by people. We received multiple comments about how people enjoyed the core idea, which was encouraging.

A couple of our friends from the IGDA Twin Cities community took it upon themselves to speedrun our game. This surprised me, because I thought the inherently random nature of our game would be a discouragement from speedrunning. They told me they enjoyed it a lot, however, and over the course of that week they posted videos of ridiculously speedy runs and provided good feedback.

By happenstance, the Twin Cities Playtest session for July was that same week, so Rebecca and I submitted Dice Tower to be played as part of that stream. Mark LaCroix, Lane Davis, and Patrick Yang all enjoyed the game’s core concept, and gave us tremendous feedback on where they felt improvements could be made, as well as different directions we could go to further expand and elaborate on the core mechanic. It was a great session, and we are greatly indebted to them for their awesome feedback.

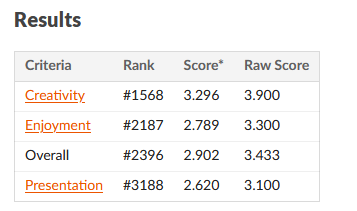

After the play-and-review week had passed, Mark Brown made his video announcing the winners of the jam, and we got to see our final results online (we did not make it into the video, which only featured the top one-hundred games).

For a game jam with over six thousand entries, we did surprisingly well, placing in the top 50% in overall score. Our creativity score was in the top third, which pleased me greatly; it felt like further validation that our core concept was good enough to build on.

Reflection

I want to circle back to those goals Rebecca and I set before the jam, and see how we did in meeting them. There were a couple of other takeaways I had as well, which I’ll present after looking at the goals.

Did We Meet Our Goals?

First and foremost, we wanted to release a game, and we did! That was huge for us, given our last effort died in development. In particular, to release this game after the mental fracturing I endured on Friday night, and having to pivot to a new idea, shows that, perhaps, we’re decent game developers, after all.

Did we scope our game properly? At the beginning, no, definitely not. Fortunately, we recognized this (albeit after spending a day on it), and decided to change to a more feasible idea. Even though we had to cut scope from the new idea as well, the core was small enough that we were able to make it in the time allotted. It gives us a new baseline for how much work we can fit in a given amount of time, which should serve us well in future endeavors.

It’s a similar story for our theme interpretation. Our first idea seemed simple, but turned out to be complex under the hood, as it’s a lot of work to add not only lots of different player abilities, but also game entities (such as enemies) to use those abilities on. In hindsight, I laugh at how we thought that kind of game was feasible in 48 hours, with not nearly enough foundation in place beforehand. That said, once again, we pivoted from the complex to the simple, and the new theme interpretation was simple enough to be doable.

Ironically, I thought this would be a common enough idea that plenty of other people would do it, but I actually never encountered a mechanic similar to this during my plays of other jam games, and multiple people commented on the novelty of the idea. Perhaps our focus on coming up with a good idea instead of a unique one managed to get us the best of both worlds!

We stuck to our guns and made a 2D side-scrolling platformer, even though at times I felt like that made it more difficult to find a good theme interpretation. Because we knew how to work in that genre, it made our mid-development pivot possible; I don’t think we could have been successful in doing that if we’d tried to make something unfamiliar to us. Additionally, I think using a genre where dice are less commonly used led to coming up with something more unique than we might’ve if we’d done an RPG or top-down game (two genres I thought would be easier fits for the theme).

One area we did miss on was making this a polished release. The missing audio was sorely felt. That said, I really like the art that Rebecca came up with, especially the decorations, and I don’t regret the decision to cut sound in favor of making levels and placing her doodads. Honestly, despite the missing audio, the fact that this game was fun and playable made this jam release feel more polished than our previous jam efforts.

A big success we had was in self-care management. Even though we didn’t stick to the maximum hours per day we set before the jam, we took care to make sure we got enough sleep each night. The end result is that this is the first jam we’ve done in which neither of us felt utterly spent at the end. That allowed us to enjoy a lovely afternoon and evening together as a couple, and we didn’t feel totally shot for days thereafter. I think that also helped us have the energy needed to push us through to the finish line.

One final goal remains to be evaluated: was our game good enough to base a future release upon? The answer is “yes”. Based on the enthusiastic feedback we received, plus our own thoughts about where the concept could go, we’ve decided to put Squirrel Project aside and focus on making a full, but small, release out of Dice Tower. With some more features and polish, and maybe a dollop of background story to tie things together, we think Dice Tower could make a good starting point for our first paid release.

Additionally, we plan to take part in future game jams, and further explore the concept of making quick games to find small ideas with good potential.

Other Takeaways

There were some additional things we learned from the jam. These emerged as byproducts of the things we tried during the jam.

Our initial thoughts were that we’d spend our brainstorming session coming up with multiple ideas, and then make small prototypes for each of them and decide which of them was the one we wanted to make. It didn’t turn out that way; both our first and second attempts settled early on a single idea, to the point where we didn’t really have many other ideas that we seriously considered. I think that perhaps that’s just how Rebecca and I work; it’s easier for us to jump into an idea and try it rather than piece a bunch of ideas together and flesh all of them out on paper. Perhaps in our next jam, then, we’ll deliberately settle on an idea right away and just go for it, making explicit allowance for pivoting if it starts feeling like too big an idea to work with.

Personally, I also learned that I need to let go of trying to test every little thing in isolation. That may be a good approach for building long-lived, stable systems, but for something as volatile as game prototyping it just slows the process down too much, with no game to show for it. Putting things together as early as possible makes things feel more real, because it is the real game! I’ll keep this in mind for future projects, to just make a go of it right off the bat, and save my efforts to make things nice and tidy for when the ideas are codified and made official.

Conclusion

Ultimately, I’m glad Rebecca and I decided to participate in GMTK Game Jam 2022. It feels exhilarating not only to finish a game in that short amount of time, but also to defeat some of my internal demons from previous jams in the process. We feel more confident in our ability to develop and release games, and we’re excited to tackle making the full release of Dice Tower.

It’ll be interesting to compare this postmortem with the postmortem for whatever that game becomes!

In the meantime, though, feel free to try out our jam release of Dice Tower and let us know what you think!